
I. Installing Julia

The IDE used here is Atom. For installation and usage, see the tutorial "Windows 10: Installing Atom and Running Julia (Detailed Guide)".

II. Introduction to Flux

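1. Flux.jl is a machine learning framework written in Julia. Like most modern frameworks, it has some similarities to PyTorch.

2. Flux takes an elegant approach to machine learning. It is a 100% pure-Julia stack and provides lightweight abstractions on top of Julia's native GPU and automatic differentiation (AD) support.

3. Flux is a library for machine learning. It is powerful and "batteries-included", with many useful tools built in, while still letting you use the full power of the Julia language wherever you need it.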

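4. Flux follows a few key principles:

(1) Flux has relatively few explicit APIs for features such as regularisation or embeddings. Instead, writing down the mathematical form will work, and it will be fast.

(2) Everything, from LSTMs to GPU kernels, is plain Julia code. When in doubt, consult the official documentation. If you need a different building block or module, it is easy to implement it yourself.

(3) Flux works well with other Julia libraries, from data frames and images to differential equation solvers. You can therefore easily build complex data-processing pipelines that integrate Flux models.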
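Principle (1) can be illustrated with a short example. The snippet writes a dense layer, a mean-squared-error loss and an L2 penalty directly as formulas in plain Julia. It is a minimal sketch that assumes a single linear layer and makes no Flux calls. The names `dense`, `mse`, `penalty` and `regularised_loss` are hypothetical helpers, not Flux APIs.

```julia
# A dense layer written as its formula: σ.(W * x .+ b)
dense(W, b, x; σ = identity) = σ.(W * x .+ b)

# Mean squared error between prediction yhat and target y
mse(yhat, y) = sum(abs2, yhat .- y) / length(y)

# L2 regularisation is just another term: λ times the sum of squared weights
penalty(W; λ = 0.01) = λ * sum(abs2, W)

# Total loss = data term + regularisation term
regularised_loss(W, b, x, y; λ = 0.01) = mse(dense(W, b, x), y) + penalty(W; λ = λ)

# Toy numbers: the prediction matches y exactly, so only the penalty remains
W = [1.0 2.0; 3.0 4.0]
b = [0.5, -0.5]
x = [1.0, 1.0]
y = [3.5, 6.5]
regularised_loss(W, b, x, y)   # ≈ 0.01 * (1 + 4 + 9 + 16) = 0.3
```

Switching off regularisation needs no special flag; it is just `λ = 0.0`.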

5. Flux documentation (a proxy may be needed to access it from mainland China): https://fluxml.ai/Flux.jl/stable/

6. Flux model examples (model zoo): https://github.com/FluxML/model-zoo/

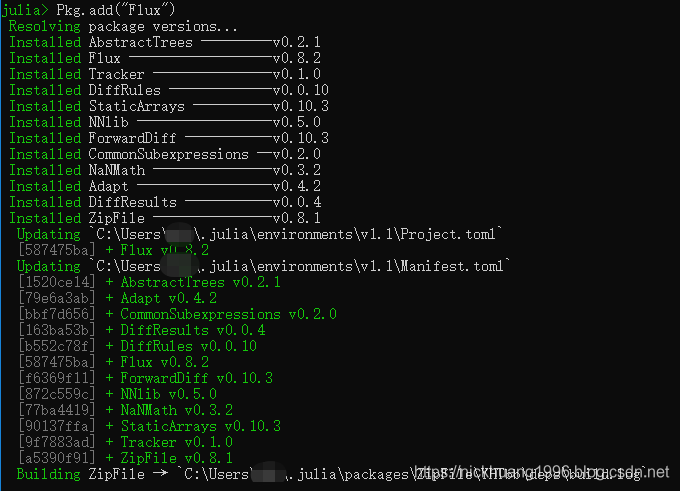

III. Installing Flux and Its Dependencies

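1. Open the Julia console, or start the Julia REPL at the bottom of Atom, and first enter:

```julia
using Pkg
```

2. Install Flux:

```julia
Pkg.add("Flux")
```

3. In the same way, install the dependencies Metalhead, Images and Statistics:

```julia
Pkg.add("Metalhead")
Pkg.add("Images")
Pkg.add("Statistics")
```

Installing Metalhead usually pulls in Images and Statistics automatically as well.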
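To confirm the installation worked, a small check can list the packages Julia still cannot find. This is a sketch. `missing_packages` is a hypothetical helper, and it relies on `Base.find_package`, an unexported Base function that returns `nothing` for a package it cannot locate on the load path. Treat that dependency as an assumption.

```julia
# Return the subset of `pkgs` that Julia cannot locate on the current load path.
# Base.find_package is unexported; it returns `nothing` when a package is not found.
missing_packages(pkgs) = [p for p in pkgs if Base.find_package(p) === nothing]

# Check the packages used in this tutorial
todo = missing_packages(["Flux", "Metalhead", "Images", "Statistics"])
if isempty(todo)
    println("All packages are available.")
else
    println("Still to install: ", join(todo, ", "))
end
```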

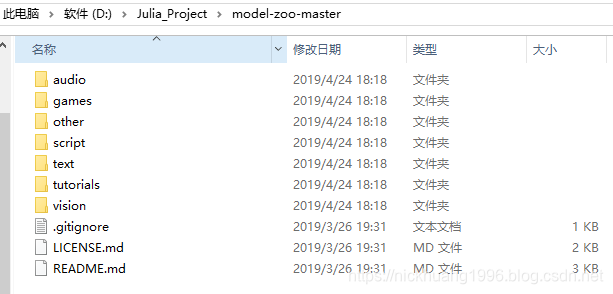

IV. Downloading the cifar10 Project

1. Download the model-zoo repository: https://github.com/FluxML/model-zoo/

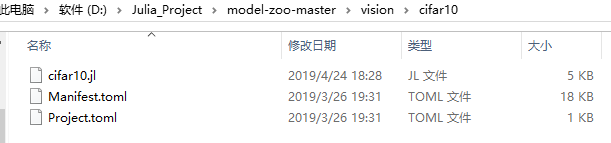

2. cifar10.jl is located in model-zoo-master\vision\cifar10.

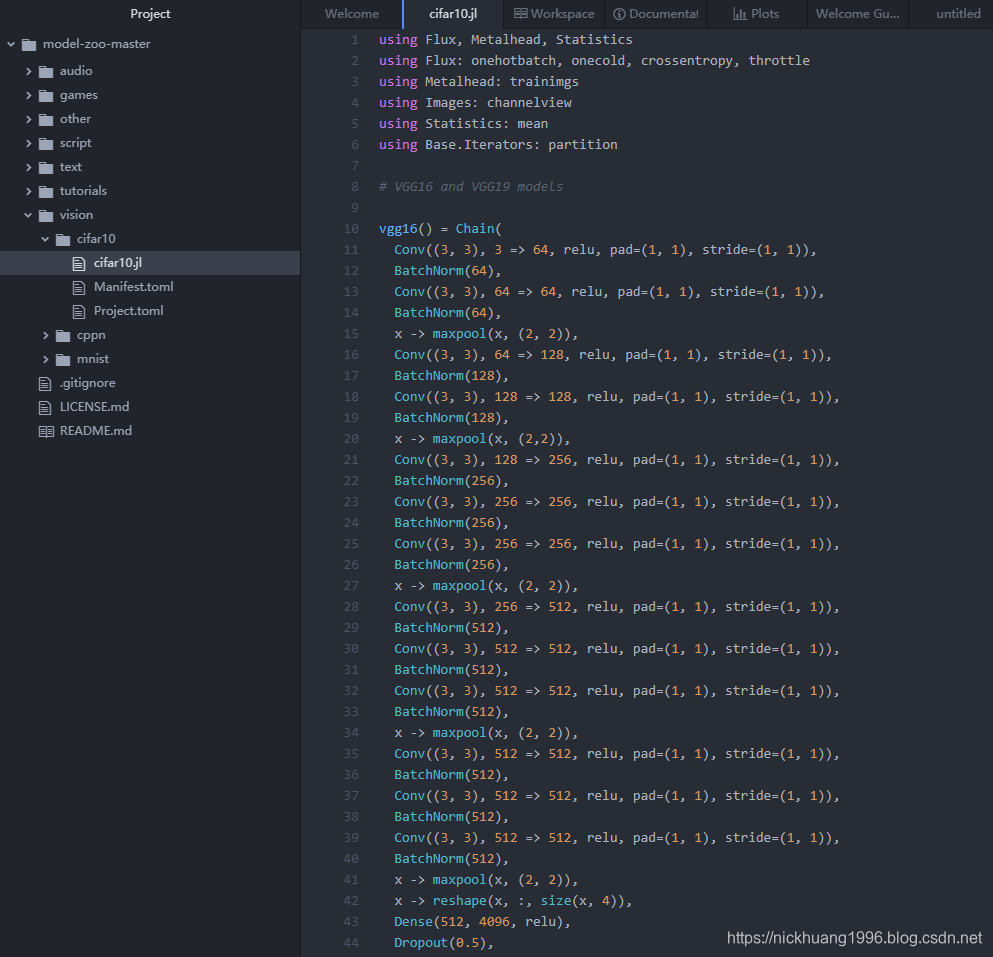

3. Open this project in Atom.

V. Downloading the CIFAR-10 Dataset

1. The dataset download function used by the model-zoo cifar10 example extracts the archive in a Linux-specific way. It is defined in

C:\Users\<your username>\.julia\packages\Metalhead\fYeSU\src\datasets\autodetect.jl:

```julia
function download(which)
    if which === ImageNet
        error("ImageNet is not automatically downloadable. See instructions in datasets/README.md")
    elseif which == CIFAR10
        local_path = joinpath(@__DIR__, "..", "..", "datasets", "cifar-10-binary.tar.gz")
        #print(local_path)
        dir_path = joinpath(@__DIR__, "..", "..", "datasets")
        # Skip everything if the archive has already been extracted
        if !isdir(joinpath(dir_path, "cifar-10-batches-bin"))
            # Download the archive only if it is not already present
            if !isfile(local_path)
                Base.download("https://www.cs.toronto.edu/~kriz/cifar-10-binary.tar.gz", local_path)
            end
            # Extraction shells out to the external `tar` command
            run(`tar -xzvf $local_path -C $dir_path`)
        end
    else
        error("Download not supported for $(which)")
    end
end
```

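Because the extraction step calls the external `tar` command, this function does not work on Windows 10 as written. You can still use the dataset on Windows: download and extract it manually.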

2. Download link: https://www.cs.toronto.edu/~kriz/cifar-10-binary.tar.gz

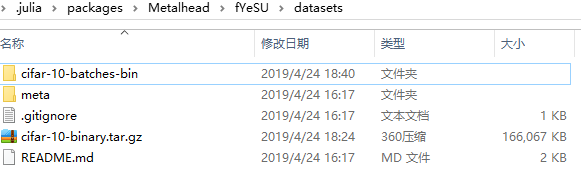

3. When the download finishes, put the archive in this folder. This is the location the Linux-oriented code above expects:

C:\Users\<your username>\.julia\packages\Metalhead\fYeSU\datasets

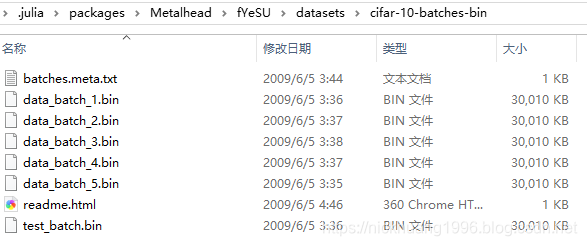

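Extract the archive in this folder so that it contains the `cifar-10-batches-bin` directory that the code above checks for.

Note: put it anywhere else and you will get nothing but errors saying the CIFAR-10 dataset cannot be found!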
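The hash folder (`fYeSU` here) differs between installations, so it can help to compute the target directory instead of typing it. The sketch below makes the same assumption as the download function above: the data lives in `<Metalhead package root>/datasets`. It also assumes the binary archive extracts to `cifar-10-batches-bin`, containing `batches.meta.txt`, `data_batch_1.bin` to `data_batch_5.bin` and `test_batch.bin`. `dataset_dir` and `missing_cifar10_files` are hypothetical helpers.

```julia
# Files assumed to be inside cifar-10-batches-bin after extracting cifar-10-binary.tar.gz
const CIFAR10_FILES = ["batches.meta.txt",
                       "data_batch_1.bin", "data_batch_2.bin", "data_batch_3.bin",
                       "data_batch_4.bin", "data_batch_5.bin", "test_batch.bin"]

# Given a package entry file <root>/src/Metalhead.jl, return <root>/datasets.
# This mirrors joinpath(@__DIR__, "..", "..", "datasets") inside src/datasets/autodetect.jl.
dataset_dir(pkg_entry::AbstractString) = joinpath(dirname(dirname(pkg_entry)), "datasets")

# Return the expected files that are missing from <datadir>/cifar-10-batches-bin
function missing_cifar10_files(datadir::AbstractString)
    bindir = joinpath(datadir, "cifar-10-batches-bin")
    return [f for f in CIFAR10_FILES if !isfile(joinpath(bindir, f))]
end

# Usage (requires Metalhead to be installed):
# entry = Base.find_package("Metalhead")   # .../Metalhead/<hash>/src/Metalhead.jl
# dir = dataset_dir(entry)                  # put cifar-10-binary.tar.gz here and extract it
# missing_cifar10_files(dir)                # empty once extraction succeeded
```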

VI. Training

1. Core code

cifar10.jl

```julia
using Flux, Metalhead, Statistics
using Flux: onehotbatch, onecold, crossentropy, throttle
using Metalhead: trainimgs, valimgs
using Images: channelview
using Statistics: mean
using Base.Iterators: partition

# VGG16 and VGG19 models

vgg16() = Chain(
  Conv((3, 3), 3 => 64, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(64),
  Conv((3, 3), 64 => 64, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(64),
  x -> maxpool(x, (2, 2)),
  Conv((3, 3), 64 => 128, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(128),
  Conv((3, 3), 128 => 128, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(128),
  x -> maxpool(x, (2, 2)),
  Conv((3, 3), 128 => 256, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(256),
  Conv((3, 3), 256 => 256, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(256),
  Conv((3, 3), 256 => 256, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(256),
  x -> maxpool(x, (2, 2)),
  Conv((3, 3), 256 => 512, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(512),
  Conv((3, 3), 512 => 512, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(512),
  Conv((3, 3), 512 => 512, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(512),
  x -> maxpool(x, (2, 2)),
  Conv((3, 3), 512 => 512, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(512),
  Conv((3, 3), 512 => 512, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(512),
  Conv((3, 3), 512 => 512, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(512),
  x -> maxpool(x, (2, 2)),
  # After five 2×2 poolings a 32×32 input is 1×1×512, so flatten to 512 features
  x -> reshape(x, :, size(x, 4)),
  Dense(512, 4096, relu),
  Dropout(0.5),
  Dense(4096, 4096, relu),
  Dropout(0.5),
  Dense(4096, 10),
  softmax) |> gpu

vgg19() = Chain(
  Conv((3, 3), 3 => 64, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(64),
  Conv((3, 3), 64 => 64, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(64),
  x -> maxpool(x, (2, 2)),
  Conv((3, 3), 64 => 128, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(128),
  Conv((3, 3), 128 => 128, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(128),
  x -> maxpool(x, (2, 2)),
  Conv((3, 3), 128 => 256, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(256),
  Conv((3, 3), 256 => 256, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(256),
  Conv((3, 3), 256 => 256, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(256),
  Conv((3, 3), 256 => 256, relu, pad=(1, 1), stride=(1, 1)),
  x -> maxpool(x, (2, 2)),
  Conv((3, 3), 256 => 512, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(512),
  Conv((3, 3), 512 => 512, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(512),
  Conv((3, 3), 512 => 512, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(512),
  Conv((3, 3), 512 => 512, relu, pad=(1, 1), stride=(1, 1)),
  x -> maxpool(x, (2, 2)),
  Conv((3, 3), 512 => 512, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(512),
  Conv((3, 3), 512 => 512, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(512),
  Conv((3, 3), 512 => 512, relu, pad=(1, 1), stride=(1, 1)),
  BatchNorm(512),
  Conv((3, 3), 512 => 512, relu, pad=(1, 1), stride=(1, 1)),
  x -> maxpool(x, (2, 2)),
  x -> reshape(x, :, size(x, 4)),
  Dense(512, 4096, relu),
  Dropout(0.5),
  Dense(4096, 4096, relu),
  Dropout(0.5),
  Dense(4096, 10),
  softmax) |> gpu

# Convert an RGB image to a Float32 array with the colour channels last (32×32×3 for CIFAR-10)
getarray(X) = Float32.(permutedims(channelview(X), (2, 3, 1)))

# Fetching the train and validation data and getting them into proper shape
X = trainimgs(CIFAR10)
imgs = [getarray(X[i].img) for i in 1:50000]
labels = onehotbatch([X[i].ground_truth.class for i in 1:50000], 1:10)
# Images 1 to 49000 are used for training, in minibatches of 100 stacked along dimension 4
train = gpu.([(cat(imgs[i]..., dims = 4), labels[:, i]) for i in partition(1:49000, 100)])
# The remaining 1000 images form the validation set
valset = collect(49001:50000)
valX = cat(imgs[valset]..., dims = 4) |> gpu
valY = labels[:, valset] |> gpu

# Defining the model, loss and accuracy functions
m = vgg16()
loss(x, y) = crossentropy(m(x), y)
accuracy(x, y) = mean(onecold(m(x), 1:10) .== onecold(y, 1:10))

# Defining the callback (prints validation accuracy at most once every 10 seconds) and the optimizer
evalcb = throttle(() -> @show(accuracy(valX, valY)), 10)
opt = ADAM()

# Starting to train the model (one pass over the training batches)
Flux.train!(loss, params(m), train, opt, cb = evalcb)

# Fetch the test data from Metalhead and get it into proper shape.
# CIFAR-10 does not specify a validation set, so valimgs is used to fetch the test data instead of testimgs
test = valimgs(CIFAR10)
testimgs = [getarray(test[i].img) for i in 1:10000]
testY = onehotbatch([test[i].ground_truth.class for i in 1:10000], 1:10) |> gpu
testX = cat(testimgs..., dims = 4) |> gpu

# Print the final accuracy
@show(accuracy(testX, testY))
```
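The data preparation and evaluation lines above rely on `partition`, `cat(...; dims = 4)`, `onehotbatch`, `onecold` and `crossentropy`. The plain-Julia sketch below reproduces the idea behind each on toy data, so the array shapes are easy to inspect. The `my_`-prefixed functions and `make_batches` are hypothetical re-implementations for illustration, not Flux's source. Flux's own versions may differ in details such as return types.

```julia
using Base.Iterators: partition
using Statistics: mean

# One-hot encode `ys` against `classes`: a Bool matrix of size
# length(classes) × length(ys) with exactly one `true` per column
function my_onehotbatch(ys, classes)
    Y = falses(length(classes), length(ys))
    for (j, y) in enumerate(ys)
        i = findfirst(==(y), classes)
        i === nothing && throw(ArgumentError("label $y is not in $classes"))
        Y[i, j] = true
    end
    return Y
end

# Inverse of one-hot: for each column, the class with the largest entry
my_onecold(P, classes) = [classes[argmax(col)] for col in eachcol(P)]

# Fraction of columns whose predicted class equals the true class
my_accuracy(P, Y, classes) = mean(my_onecold(P, classes) .== my_onecold(Y, classes))

# Cross-entropy of probabilities P against one-hot targets Y, averaged over the columns
my_crossentropy(P, Y) = -sum(Y .* log.(P)) / size(Y, 2)

# Stack H×W×C images into H×W×C×N minibatches and pair each with its label columns
make_batches(imgs, Y, idx, bs) =
    [(cat(imgs[i]...; dims = 4), Y[:, i]) for i in partition(idx, bs)]

# Toy data: 3 classes, 5 tiny 2×2×3 "images", batch size 2
imgs = [fill(Float32(k), 2, 2, 3) for k in 1:5]
Y = my_onehotbatch([1, 2, 3, 1, 2], 1:3)
batches = make_batches(imgs, Y, 1:5, 2)   # batches of 2, 2 and 1 samples

# Fake softmax outputs for the first batch: sample 1 is right, sample 2 is wrong
P = [0.8 0.6; 0.1 0.3; 0.1 0.1]
my_accuracy(P, batches[1][2], 1:3)
my_crossentropy(P, batches[1][2])
```

As in the script, the batch dimension is the last one. This is why the images are concatenated along `dims = 4` while labels are sliced by column.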

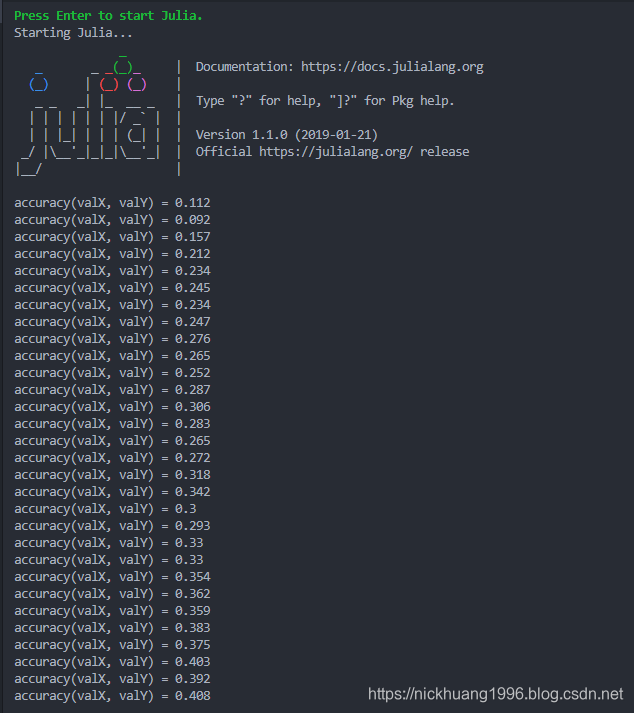

2. From the menu bar, choose Packages -> Julia -> Run File. The REPL shows the training progress, and the last line of the code prints the final test accuracy.
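The accuracy lines printed during training come from `evalcb`. It wraps the validation check in `throttle(..., 10)`, so the check runs at most once every 10 seconds instead of after every minibatch. Below is a minimal plain-Julia sketch of that behaviour. `my_throttle` is hypothetical, not Flux's implementation, and it only handles the simple case where the first call in each time window runs.

```julia
# Wrap `f` so that it runs at most once per `timeout` seconds.
# Calls arriving before the window has elapsed are dropped and return `nothing`.
function my_throttle(f, timeout::Real)
    last_call = -Inf                      # time of the last call that actually ran
    return function (args...)
        t = time()
        if t - last_call >= timeout
            last_call = t
            return f(args...)
        end
        return nothing
    end
end

# Only the first of these rapid calls prints
report = my_throttle(i -> println("batch ", i), 10)
for i in 1:5
    report(i)
end
```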

3. To train on the GPU, you also need to install CuArrays:

```julia
using Pkg
Pkg.add("CuArrays")
```

You also need CUDA and cuDNN installed. For details, see the official documentation: https://fluxml.ai/Flux.jl/stable/gpu/#Installation-

Source: nickhuang1996.blog.csdn.net. Author: 悲恋花丶无心之人. Copyright belongs to the original author; please contact the author before reposting.

Original article: nickhuang1996.blog.csdn.net/article/details/89500786